On the occasion of Dr Mehr's visit to IRCAM this month, the CREAM and Analyse des Pratiques Musicales (APM) teams are happy to organize an art-and-science mini-symposium on the Cultural Evolution of Music, in which Dr Sam Mehr (Dept. Psychology, Harvard, MA) will report on his recent Natural History of Song project, Dr Nicolas Baumard (Dept. Cognitive Science, Ecole Normale Supérieure, Paris) will present his research on the evolution of emotions using portraits, and contemporary composer Pascal Dusapin will describe his ongoing Lullaby Experience project. The symposium is free and open to all, subject to seat availability.

Update (18/4): The participation of Pascal Dusapin has been cancelled due to unforeseen circumstances.

Date: Thursday April 18th

Hours: Afternoon, 15h-18h

Place: Salle Stravinsky, Institut de Recherche et Coordination en Acoustique/Musique (IRCAM), 1 Place Stravinsky 75004 Paris.

Local organizers: Clément Canonne (APM), Jean-Julien Aucouturier (PDS/CREAM), IRCAM/CNRS/Sorbonne Université.

Additional lecture: As a companion to the event, Dr Sam Mehr will also give a lecture on the origins and functions of music in infancy at Ecole Normale Supérieure on the morning of Friday April 19th (see information below).

Thursday April 18th, 15h-18h, IRCAM

The Cultural Evolution of Music

15h-16h – Dr Sam Mehr (Harvard University, MA, USA)

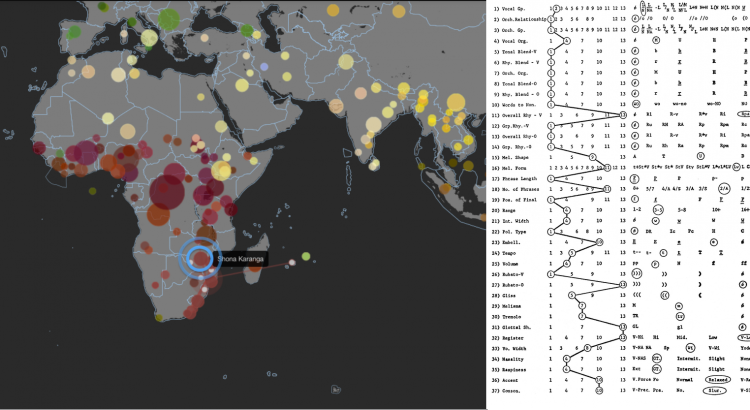

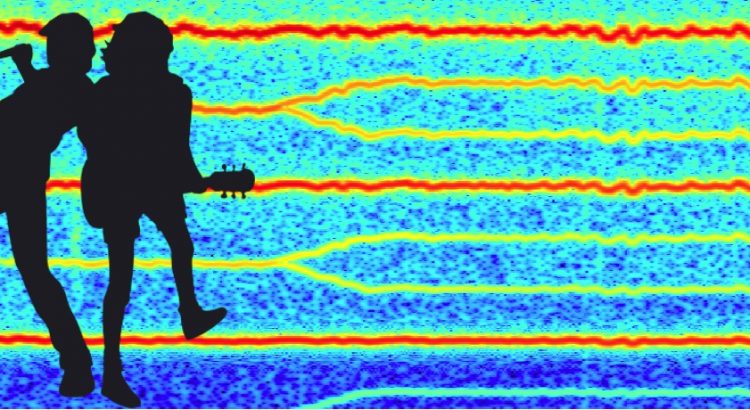

A natural history of song

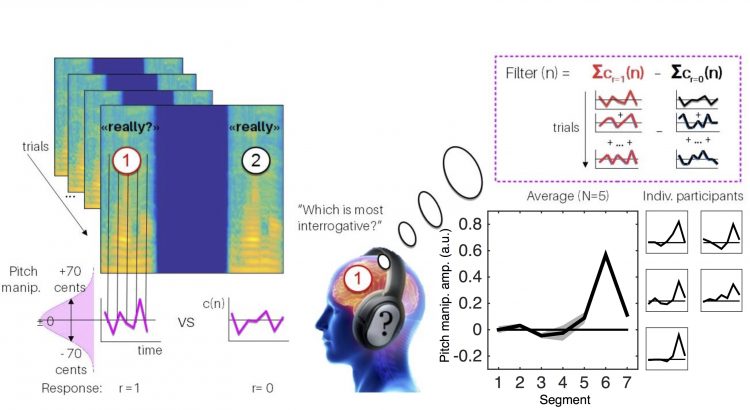

Theories of the origins of music claim that the music faculty is shaped by the functional design of the human mind. On these accounts, musical behavior and musical structure are expected to exhibit species-wide regularities: music should be characterized by human universals. Many cognitive and evolutionary scientists intuitively accept this idea, but no one has any good evidence for it. Most scholars of music, in contrast, intuitively accept the opposite position, citing the staggering diversity of the world’s music as evidence that music is shaped mostly by culture. I will present two papers that attempt to resolve this debate. The first, a pair of experiments, shows that the musical forms of songs in 86 cultures are shaped by their social functions (Mehr & Singh et al., 2018, Current Biology). The second, a descriptive project, applies tools of computational social science to the recently created Natural History of Song corpora (http://naturalhistoryofsong.org) to demonstrate universals and dimensions of variation in musical behaviors and musical forms (Mehr et al., working paper, https://psyarxiv.com/emq8r).

Samuel Mehr is a Research Associate in the Department of Psychology at Harvard University, where he directs the Music Lab. Originally a musician, Sam earned a B.M. in Music Education from the Eastman School of Music before diving into science at Harvard, where he earned an Ed.D. in Human Development and Education under the mentorship of Elizabeth Spelke, Howard Gardner, and Steven Pinker.

16h-17h – Dr Nicolas Baumard (Ecole Normale Supérieure, Paris)

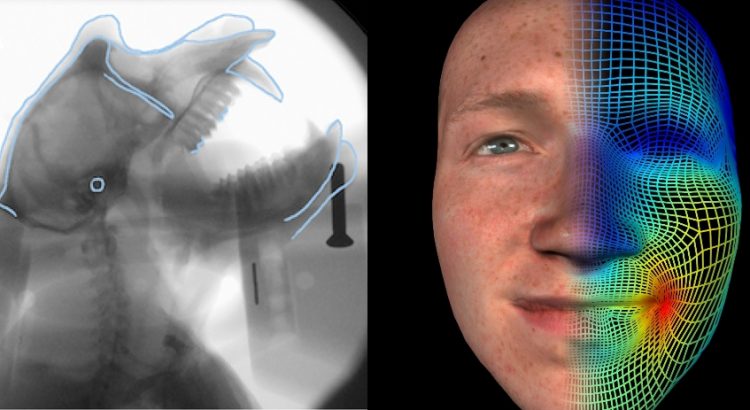

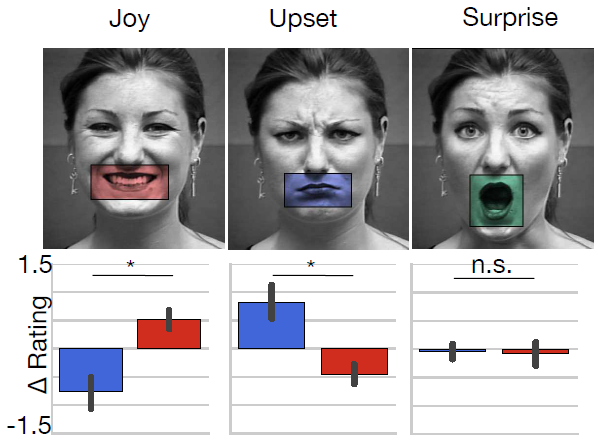

Psychological Origins of Cultural Revolutions

Social trust is linked to a host of positive societal outcomes, including improved economic performance, lower crime rates and more inclusive institutions. Yet the origins of trust remain elusive, partly because social trust is difficult to document over time. Building on recent advances in social cognition, we designed an algorithm to automatically generate trustworthiness evaluations from the facial action units (smile, eyebrows, etc.) of portraits in large historical databases. Our results show that trustworthiness in portraits increased over the period 1500–2000, paralleling the decline of interpersonal violence and the rise of democratic values observed in Western Europe. Further analyses suggest that this rise in trustworthiness displays is associated with increased living standards.

Nicolas Baumard is a CNRS researcher in the Department of Cognitive Sciences at the École Normale Supérieure in Paris, working in the Evolution and Social Cognition team at Institut Jean-Nicod. His work uses evolutionary and psychological approaches in the social sciences, in particular in economics and history. More specifically, his recent work has used reciprocity theory (in particular partner choice) to explain why moral judgments and cooperative behaviors are based on considerations of fairness, and life-history theory to explain behavioral variability across cultures, historical periods, social classes and developmental stages.

17h-18h – Pascal Dusapin – The Lullaby Experience Project

Update (18/4): The participation of Pascal Dusapin has been cancelled due to unforeseen circumstances.

Lullaby Experience is a participatory project conceived by the composer Pascal Dusapin, open to everyone, children and adults, anywhere in the world. Each of us carries, inscribed deep within, a melody that marked our childhood. Often, this nursery rhyme has been distorted by time and memory. It is this memory that you are asked to sing or whisper. The collected recordings will provide the sound material used by the composer for the musical work Lullaby Experience. Transformed and assembled, they will draw a sonic portrait of each city where the work is presented. The French premiere of Lullaby Experience will take place at the CENTQUATRE in Paris in June 2019.

Pascal Dusapin studied visual arts and sciences, arts and aesthetics at the Université de Paris-Sorbonne. Between 1974 and 1978 he attended the seminars of Iannis Xenakis. From 1981 to 1983 he was a fellow at the Villa Médicis in Rome. He has received numerous distinctions since the very beginning of his career as a composer, among them the Prix symphonique de la Sacem in 1994, the Grand prix national de musique of the Ministry of Culture in 1995, and the Grand prix de la ville de Paris in 1998. He was awarded the Victoire de la musique in 1998 for the recording made with the Orchestre national de Lyon, and again in 2002 as "composer of the year". In 2005 he received the Prix Cino del Duca, awarded by the Académie des Beaux-arts. He is a Commandeur des Arts et des Lettres. He was elected to the Bayerische Akademie der Schönen Künste in July 2006. In 2006 he was appointed professor at the Collège de France, holding the chair of artistic creation. In 2007 he received the international Dan David Prize, an international prize for excellence recognizing scientific and artistic work, which he shared with Zubin Mehta for contemporary music. In 2014 he was made a Chevalier de l'Ordre national de la Légion d'honneur.

Friday April 19th, 11h, Ecole Normale Supérieure.

Salle séminaire du pavillon jardin, 29 rue d’Ulm.

Additional lecture by Sam Mehr: The origins and functions of music in infancy

In 1871, Darwin wrote, “As neither the enjoyment nor the capacity of producing musical notes are faculties of the least use to man in reference to his daily habits of life, they must be ranked among the most mysterious with which he is endowed.” Infants and parents engage their mysterious musical faculties eagerly, frequently, across most societies, and for most of history. Why should this be? In this talk I propose that infant-directed song functions as an honest signal of parental investment. I support the proposal with two lines of work. First, I show that the perception and production of infant-directed song are characterized by human universals, in cross-cultural studies of music perception run with listeners on the internet; in isolated, small-scale societies; and in infants, who have much less experience than adults with music. Second, I show that the genomic imprinting disorders Prader-Willi and Angelman syndromes, which cause an altered psychology of parental investment, are associated with an altered psychology of music. These findings converge on a psychological function of music in infancy that may underlie more general features of the human music faculty.